Fourier Neural Operator for Parametric Partial Differential Equations

Papers and Code

Discontinuous Galerkin finite element operator network for solving non-smooth PDEs

Jan 07, 2026

We introduce Discontinuous Galerkin Finite Element Operator Network (DG-FEONet), a data-free operator learning framework that combines the strengths of the discontinuous Galerkin (DG) method with neural networks to solve parametric partial differential equations (PDEs) with discontinuous coefficients and non-smooth solutions. Unlike traditional operator learning models such as DeepONet and Fourier Neural Operator, which require large paired datasets and often struggle near sharp features, our approach minimizes the residual of a DG-based weak formulation using the Symmetric Interior Penalty Galerkin (SIPG) scheme. DG-FEONet predicts element-wise solution coefficients via a neural network, enabling data-free training without the need for precomputed input-output pairs. We provide theoretical justification through convergence analysis and validate the model's performance on a series of one- and two-dimensional PDE problems, demonstrating accurate recovery of discontinuities, strong generalization across parameter space, and reliable convergence rates. Our results highlight the potential of combining local discretization schemes with machine learning to achieve robust, singularity-aware operator approximation in challenging PDE settings.

CompNO: A Novel Foundation Model approach for solving Partial Differential Equations

Jan 12, 2026

Partial differential equations (PDEs) govern a wide range of physical phenomena, but their numerical solution remains computationally demanding, especially when repeated simulations are required across many parameter settings. Recent Scientific Foundation Models (SFMs) aim to alleviate this cost by learning universal surrogates from large collections of simulated systems, yet they typically rely on monolithic architectures with limited interpretability and high pretraining expense. In this work we introduce Compositional Neural Operators (CompNO), a compositional neural operator framework for parametric PDEs. Instead of pretraining a single large model on heterogeneous data, CompNO first learns a library of Foundation Blocks, where each block is a parametric Fourier neural operator specialized to a fundamental differential operator (e.g. convection, diffusion, nonlinear convection). These blocks are then assembled, via lightweight Adaptation Blocks, into task-specific solvers that approximate the temporal evolution operator for target PDEs. A dedicated boundary-condition operator further enforces Dirichlet constraints exactly at inference time. We validate CompNO on one-dimensional convection, diffusion, convection-diffusion and Burgers' equations from the PDEBench suite. The proposed framework achieves lower relative L2 error than strong baselines (PFNO, PDEFormer and in-context learning based models) on linear parametric systems, while remaining competitive on nonlinear Burgers' flows. The model maintains exact boundary satisfaction with zero loss at domain boundaries, and exhibits robust generalization across a broad range of Peclet and Reynolds numbers. These results demonstrate that compositional neural operators provide a scalable and physically interpretable pathway towards foundation models for PDEs.

Intrinsic-Metric Physics-Informed Neural Networks (IM-PINN) for Reaction-Diffusion Dynamics on Complex Riemannian Manifolds

Jan 07, 2026

Simulating nonlinear reaction-diffusion dynamics on complex, non-Euclidean manifolds remains a fundamental challenge in computational morphogenesis, constrained by high-fidelity mesh generation costs and symplectic drift in discrete time-stepping schemes. This study introduces the Intrinsic-Metric Physics-Informed Neural Network (IM-PINN), a mesh-free geometric deep learning framework that solves partial differential equations directly in the continuous parametric domain. By embedding the Riemannian metric tensor into the automatic differentiation graph, our architecture analytically reconstructs the Laplace-Beltrami operator, decoupling solution complexity from geometric discretization. We validate the framework on a "Stochastic Cloth" manifold with extreme Gaussian curvature fluctuations ($K \in [-2489, 3580]$), where traditional adaptive refinement fails to resolve anisotropic Turing instabilities. Using a dual-stream architecture with Fourier feature embeddings to mitigate spectral bias, the IM-PINN recovers the "splitting spot" and "labyrinthine" regimes of the Gray-Scott model. Benchmarking against the Surface Finite Element Method (SFEM) reveals superior physical rigor: the IM-PINN achieves global mass conservation error of $\mathcal{E}_{mass} \approx 0.157$ versus SFEM's $0.258$, acting as a thermodynamically consistent global solver that eliminates mass drift inherent in semi-implicit integration. The framework offers a memory-efficient, resolution-independent paradigm for simulating biological pattern formation on evolving surfaces, bridging differential geometry and physics-informed machine learning.
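
For orientation, the Laplace-Beltrami operator mentioned in this abstract has a standard coordinate expression in terms of the metric tensor. The notation below is the textbook one and is an assumption here, not taken from the paper: $g_{ij}$ is the metric in local coordinates $x^i$, $g^{ij}$ its inverse, and $|g|$ its determinant.

$$
\Delta_g u \;=\; \frac{1}{\sqrt{|g|}} \sum_{i,j} \frac{\partial}{\partial x^i}\!\left( \sqrt{|g|}\; g^{ij}\, \frac{\partial u}{\partial x^j} \right)
$$

On a flat domain $g_{ij} = \delta_{ij}$ and the expression reduces to the ordinary Laplacian, which is why an operator built this way depends on the geometry only through the metric and not through a surface mesh.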

Light-Weight Diffusion Multiplier and Uncertainty Quantification for Fourier Neural Operators

Aug 01, 2025

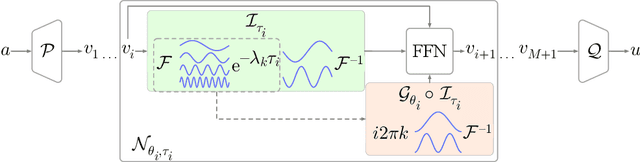

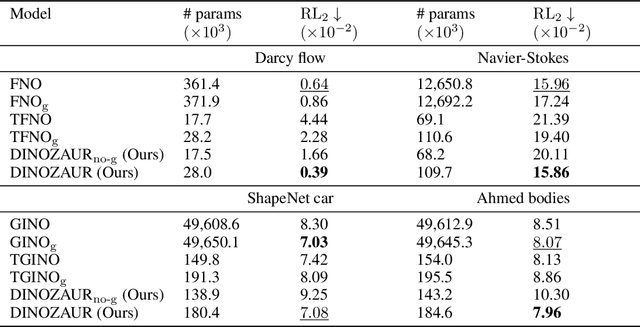

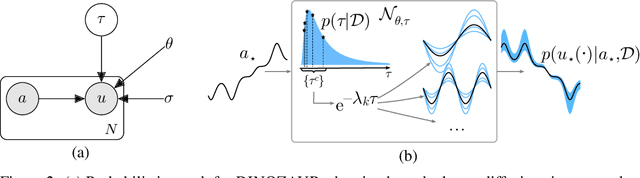

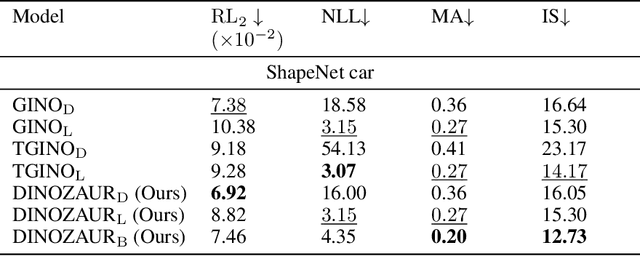

Operator learning is a powerful paradigm for solving partial differential equations, with Fourier Neural Operators serving as a widely adopted foundation. However, FNOs face significant scalability challenges due to overparameterization and offer no native uncertainty quantification -- a key requirement for reliable scientific and engineering applications. Instead, neural operators rely on post hoc UQ methods that ignore geometric inductive biases. In this work, we introduce DINOZAUR: a diffusion-based neural operator parametrization with uncertainty quantification. Inspired by the structure of the heat kernel, DINOZAUR replaces the dense tensor multiplier in FNOs with a dimensionality-independent diffusion multiplier that has a single learnable time parameter per channel, drastically reducing parameter count and memory footprint without compromising predictive performance. By defining priors over those time parameters, we cast DINOZAUR as a Bayesian neural operator to yield spatially correlated outputs and calibrated uncertainty estimates. Our method achieves competitive or superior performance across several PDE benchmarks while providing efficient uncertainty quantification.
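
To make the idea of a heat-kernel-style multiplier concrete, here is a minimal NumPy sketch, not DINOZAUR's implementation. It assumes a 1D periodic grid on $[0, 2\pi)$ and applies the spectral factor $e^{-t_c k^2}$ with one scalar "time" `t` per channel; the function name `diffusion_multiplier_1d` and the array layout are hypothetical choices made for illustration.

```python
import numpy as np

def diffusion_multiplier_1d(u, t):
    """Apply the spectral factor exp(-t_c * k^2) to each channel of u.

    u: array of shape (channels, n), samples on a periodic grid over [0, 2*pi).
    t: array of shape (channels,), one non-negative time parameter per channel.
    """
    n = u.shape[-1]
    k = np.fft.rfftfreq(n, d=1.0 / n)                 # integer wavenumbers 0..n/2
    u_hat = np.fft.rfft(u, axis=-1)                   # forward real FFT per channel
    multiplier = np.exp(-t[:, None] * k[None, :] ** 2)  # one decay curve per channel
    return np.fft.irfft(u_hat * multiplier, n=n, axis=-1)

# Usage: channel 0 is left untouched (t = 0), channel 1 is smoothed (t = 0.5).
x = np.linspace(0.0, 2.0 * np.pi, 64, endpoint=False)
u = np.stack([np.sin(x), np.sin(3.0 * x)])
t = np.array([0.0, 0.5])
smoothed = diffusion_multiplier_1d(u, t)
```

In this sketch the multiplier has one parameter per channel regardless of grid size or number of retained modes, whereas a dense spectral weight tensor grows with both; that contrast is the point the abstract makes about parameter count.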

Maximal Update Parametrization and Zero-Shot Hyperparameter Transfer for Fourier Neural Operators

Jun 24, 2025

Fourier Neural Operators (FNOs) offer a principled approach for solving complex partial differential equations (PDEs). However, scaling them to handle more complex PDEs requires increasing the number of Fourier modes, which significantly expands the number of model parameters and makes hyperparameter tuning computationally impractical. To address this, we introduce $\mu$Transfer-FNO, a zero-shot hyperparameter transfer technique that enables optimal configurations, tuned on smaller FNOs, to be directly applied to billion-parameter FNOs without additional tuning. Building on the Maximal Update Parametrization ($\mu$P) framework, we mathematically derive a parametrization scheme that facilitates the transfer of optimal hyperparameters across models with different numbers of Fourier modes in FNOs, which is validated through extensive experiments on various PDEs. Our empirical study shows that $\mu$Transfer-FNO reduces computational cost for tuning hyperparameters on large FNOs while maintaining or improving accuracy.

From Theory to Application: A Practical Introduction to Neural Operators in Scientific Computing

Mar 07, 2025

This focused review explores a range of neural operator architectures for approximating solutions to parametric partial differential equations (PDEs), emphasizing high-level concepts and practical implementation strategies. The study covers foundational models such as Deep Operator Networks (DeepONet), Principal Component Analysis-based Neural Networks (PCANet), and Fourier Neural Operators (FNO), providing comparative insights into their core methodologies and performance. These architectures are demonstrated on two classical linear parametric PDEs: the Poisson equation and linear elastic deformation. Beyond forward problem-solving, the review delves into applying neural operators as surrogates in Bayesian inference problems, showcasing their effectiveness in accelerating posterior inference while maintaining accuracy. The paper concludes by discussing current challenges, particularly in controlling prediction accuracy and generalization. It outlines emerging strategies to address these issues, such as residual-based error correction and multi-level training. This review can be seen as a comprehensive guide to implementing neural operators and integrating them into scientific computing workflows.

Graph Fourier Neural Kernels (G-FuNK): Learning Solutions of Nonlinear Diffusive Parametric PDEs on Multiple Domains

Oct 06, 2024

Predicting time-dependent dynamics of complex systems governed by non-linear partial differential equations (PDEs) with varying parameters and domains is a challenging task motivated by applications across various fields. We introduce a novel family of neural operators based on our Graph Fourier Neural Kernels, designed to learn solution generators for nonlinear PDEs in which the highest-order term is diffusive, across multiple domains and parameters. G-FuNK combines components that are parameter- and domain-adapted with others that are not. The domain-adapted components are constructed using a weighted graph on the discretized domain, where the graph Laplacian approximates the highest-order diffusive term, ensuring boundary condition compliance and capturing the parameter and domain-specific behavior. Meanwhile, the learned components transfer across domains and parameters via Fourier Neural Operators. This approach naturally embeds geometric and directional information, improving generalization to new test domains without need for retraining the network. To handle temporal dynamics, our method incorporates an integrated ODE solver to predict the evolution of the system. Experiments show G-FuNK's capability to accurately approximate heat, reaction diffusion, and cardiac electrophysiology equations across various geometries and anisotropic diffusivity fields. G-FuNK achieves low relative errors on unseen domains and fiber fields, significantly accelerating predictions compared to traditional finite-element solvers.
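
As a small illustration of how a weighted graph Laplacian can approximate a diffusive term, the sketch below builds $L = D - W$ for a uniform 1D path graph with edge weights $1/h^2$, so that $-(Lu)_i$ reproduces the centered second difference. This is only a toy instance under stated assumptions: the uniform grid, the weights, and the name `path_graph_laplacian` are ours, and the graphs described in the abstract (general domains, anisotropic diffusivity) are more elaborate.

```python
import numpy as np

def path_graph_laplacian(n, h):
    """Weighted graph Laplacian L = D - W of a path graph with edge weights 1/h^2."""
    W = np.zeros((n, n))
    idx = np.arange(n - 1)
    W[idx, idx + 1] = 1.0 / h**2      # edge i -> i+1
    W[idx + 1, idx] = 1.0 / h**2      # symmetric edge i+1 -> i
    D = np.diag(W.sum(axis=1))        # weighted degree matrix
    return D - W

# Usage: on interior nodes, -(L u) is the centered second difference of u.
n = 51
x = np.linspace(0.0, 1.0, n)
h = x[1] - x[0]
L = path_graph_laplacian(n, h)
u = x**2
approx_second_derivative = -(L @ u)[1:-1]   # u'' = 2 for u = x^2
```

The two end nodes are excluded from the comparison because each has only one neighbour, so their rows do not form a second difference.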

Spectral Convolutional Conditional Neural Processes

Apr 19, 2024

Conditional Neural Processes (CNPs) constitute a family of probabilistic models that harness the flexibility of neural networks to parameterize stochastic processes. Their capability to furnish well-calibrated predictions, combined with simple maximum-likelihood training, has established them as appealing solutions for addressing various learning problems, with a particular emphasis on meta-learning. A prominent member of this family, Convolutional Conditional Neural Processes (ConvCNPs), utilizes convolution to explicitly introduce translation equivariance as an inductive bias. However, ConvCNP's reliance on local discrete kernels in its convolution layers can pose challenges in capturing long-range dependencies and complex patterns within the data, especially when dealing with limited and irregularly sampled observations from a new task. Building on the successes of Fourier neural operators (FNOs) for approximating the solution operators of parametric partial differential equations (PDEs), we propose Spectral Convolutional Conditional Neural Processes (SConvCNPs), a new addition to the NPs family that allows for more efficient representation of functions in the frequency domain.

Neural Green's Operators for Parametric Partial Differential Equations

Jun 04, 2024

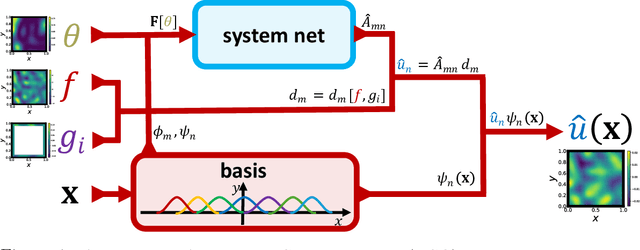

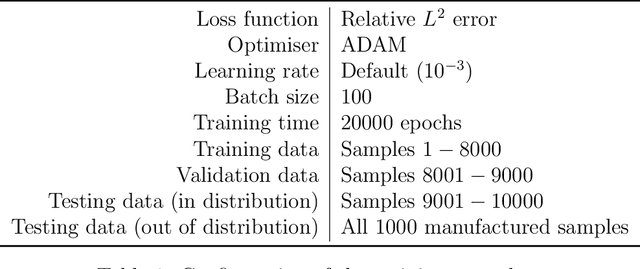

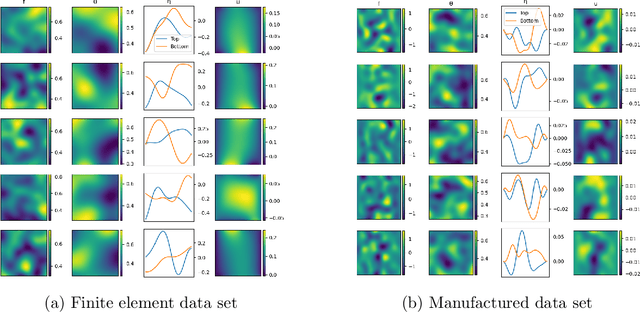

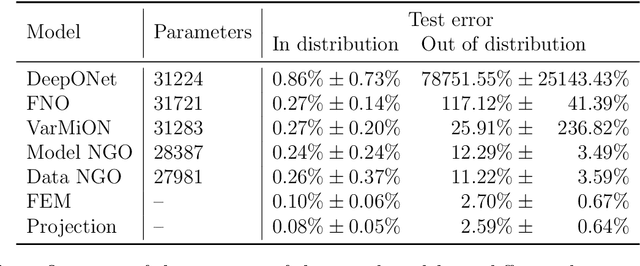

This work introduces neural Green's operators (NGOs), a novel neural operator network architecture that learns the solution operator for a parametric family of linear partial differential equations (PDEs). Our construction of NGOs is derived directly from the Green's formulation of such a solution operator. Similar to deep operator networks (DeepONets) and variationally mimetic operator networks (VarMiONs), NGOs constitute an expansion of the solution to the PDE in terms of basis functions, which are returned from one sub-network, contracted with coefficients, which are returned from another sub-network. However, in accordance with the Green's formulation, NGOs accept weighted averages of the input functions, rather than sampled values thereof, as is the case in DeepONets and VarMiONs. Application of NGOs to canonical linear parametric PDEs shows that, while they remain competitive with DeepONets, VarMiONs and Fourier neural operators when testing on data that lie within the training distribution, they robustly generalize when testing on finer-scale data generated outside of the training distribution. Furthermore, we show that the explicit representation of the Green's function that is returned by NGOs enables the construction of effective preconditioners for numerical solvers for PDEs.
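
The Green's formulation referred to here can be illustrated with a classical example rather than the NGO architecture itself. For $-u'' = f$ on $(0, 1)$ with $u(0) = u(1) = 0$, the solution is $u(x) = \int_0^1 G(x, y)\, f(y)\, dy$ with the known kernel $G(x, y) = x(1 - y)$ for $x \le y$ and $y(1 - x)$ otherwise. The sketch below evaluates that integral with trapezoidal weights; the function names are hypothetical, and an NGO would learn a representation of $G$ instead of using a closed form.

```python
import numpy as np

def greens_function(x, y):
    """Green's function of -u'' = f on (0, 1) with u(0) = u(1) = 0."""
    return np.where(x <= y, x * (1.0 - y), y * (1.0 - x))

def solve_poisson_1d(f_values, grid):
    """Evaluate u(x_i) = sum_j w_j G(x_i, y_j) f(y_j) on a uniform grid."""
    h = grid[1] - grid[0]
    w = np.full(grid.shape, h)        # trapezoidal quadrature weights
    w[0] = w[-1] = h / 2.0
    G = greens_function(grid[:, None], grid[None, :])
    return G @ (w * f_values)         # weighted average of the input function

# Usage: for f = 1 the exact solution is u(x) = x (1 - x) / 2.
grid = np.linspace(0.0, 1.0, 101)
u = solve_poisson_1d(np.ones_like(grid), grid)
```

Each output value is a weighted average of the input function against one row of the kernel, which matches the abstract's description of operating on weighted averages of inputs instead of point samples.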

Learning Flame Evolution Operator under Hybrid Darrieus Landau and Diffusive Thermal Instability

May 11, 2024

Recent advancements in the integration of artificial intelligence (AI) and machine learning (ML) with physical sciences have led to significant progress in addressing complex phenomena governed by nonlinear partial differential equations (PDEs). This paper explores the application of novel operator learning methodologies to unravel the intricate dynamics of flame instability, particularly focusing on hybrid instabilities arising from the coexistence of Darrieus-Landau (DL) and Diffusive-Thermal (DT) mechanisms. Training datasets encompass a wide range of parameter configurations, enabling the learning of parametric solution advancement operators using techniques such as the parametric Fourier Neural Operator (pFNO) and parametric convolutional neural networks (pCNN). Results demonstrate the efficacy of these methods in accurately predicting short-term and long-term flame evolution across diverse parameter regimes, capturing the characteristic behaviors of pure and blended instabilities. Comparative analyses reveal pFNO as the most accurate model for learning short-term solutions, while all models exhibit robust performance in capturing the nuanced dynamics of flame evolution. This research contributes to the development of robust modeling frameworks for understanding and controlling complex physical processes governed by nonlinear PDEs.
